Speeding up Apache MXNet with the NNPACK library

Apache MXNet is an Open Source library helping developers to build, train and re-use Deep Learning networks. In this article, I’ll show you how to speed up predictions thanks to the NNPACK library. Before we dive in, let’s first discuss why we would want to do this.

The original post was based on MXNet 0.11. It was updated on April 14th, 2018 and it will now let you successfully build MXNet 1.1 with NNPACK. For more information on why this isn’t as easy as it should be, see https://github.com/Maratyszcza/NNPACK/issues/135 and https://github.com/apache/incubator-mxnet/pull/9860

Training

Training is the step where a neural network learns how to correctly predict the right output for each sample in the data set. One batch at a time (typically from 32 to 256 samples), the data set is fed into the network, which then proceeds to minimise total error by adjusting its weights thanks to the backpropagation algorithm.

Going through the full data set is called an “epoch”: large networks may be trained for hundreds of epochs to reach the highest accuracy possible. This may take days or even weeks, which is where GPUs step in: thanks to their formidable parallel processing power, training time can be significantly cut down, compared to even the most powerful of CPUs.
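To make the vocabulary concrete, here is a minimal sketch of batches and epochs in plain Python. It fits a single-weight model y = w * x instead of a real network, so the gradient is written by hand rather than computed by backpropagation. The function name, learning rate and toy data set are assumptions made up for this example.

```python
import random

def train(samples, epochs=200, batch_size=4, lr=0.01, seed=0):
    """Fit y = w * x by mini-batch gradient descent (toy stand-in for a network)."""
    rng = random.Random(seed)
    data = list(samples)
    w = 0.0
    for _ in range(epochs):              # one epoch = one full pass over the data set
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the mean squared error over this batch with respect to w.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad               # adjust the weight to reduce the error
    return w

# Toy data set generated from y = 3x (an assumption for the example).
toy = [(x, 3.0 * x) for x in range(1, 9)]
learned_w = train(toy)
print("learned weight:", learned_w)
```

A real network has millions of weights instead of one, which is why each epoch is so expensive and why GPUs help so much.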

Inference

Inference is the step where you actually use the trained network to predict a result from new data samples. You could be predicting with one sample at a time, for example trying to identify objects in a single picture like Amazon Rekognition does, or with multiple samples, for example trying to track a moving object in a video stream.

Of course, GPUs are equally efficient at inference. However, many systems are not able to accommodate them because of cost, power consumption or form factor constraints (just think of embedded systems).

Being able to run fast CPU-based inference is an important topic.

This is where the NNPACK library comes into play, as it will help us speed up CPU inference in Apache MXNet.

The NNPACK Library

NNPACK is an Open Source library available on GitHub. It implements operations like matrix multiplication, convolution and pooling in a highly optimised fashion.

These operations are at the core of neural networks, in particular of Convolutional Neural Networks, which are predominantly used to detect features in an image.
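To get a feel for the amount of work involved, here is a naive 2D convolution written in plain Python. This is only an illustration of the operation itself, not of how NNPACK implements it: the whole point of the library is to replace these nested loops with much faster algorithms. The function name and the sample values are assumptions for this sketch.

```python
def conv2d(image, kernel):
    """Naive 'valid' 2D convolution (cross-correlation, as used in CNNs) on nested lists."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(ih - kh + 1):             # slide the kernel over every row...
        row = []
        for j in range(iw - kw + 1):         # ...and every column
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
result = conv2d(image, kernel)
print(result)  # [[-4, -4], [-4, -4]]
```

Four nested loops for a single channel and a single filter: multiply that by the number of channels, filters and layers in a real network and you can see why optimised implementations matter.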

If you’re curious about the theory and math that help make these operations very fast, please refer to the research papers mentioned by the author in this Reddit post.

NNPACK is available for Linux and macOS. It’s optimised for Intel x86-64 processors with the AVX2 instruction set, as well as for ARMv7 processors with the NEON instruction set and for ARMv8 processors.

In this post, I will use a c4.8xlarge instance running the Deep Learning AMI, which already includes some of the dependencies we need. Here’s what we’re going to do:

- Build the NNPACK library from source,

- Build the cpuinfo library from source,

- Build Apache MXNet from source with NNPACK and cpuinfo,

- Run some image classification benchmarks using a variety of networks.

Let’s get to work!

Building NNPACK

NNPACK uses the ninja build tool. Unfortunately, the Ubuntu repository does not host the latest version, so we need to build it from source as well.

Now let’s prepare the NNPACK build, as per instructions.

Before we actually build, we need to tweak the configuration file a bit. The reason for this is that NNPACK only builds as a static library whereas MXNet builds as a dynamic library: thus, they won’t link properly. The MXNet documentation suggests using an older version of NNPACK, but there’s another way ;)

We need to edit the build.ninja file and add the ‘-fPIC’ flag to cflags and cxxflags, in order to build C and C++ files as position-independent code, which is really all we need to link with the MXNet shared library.
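If you’d rather not edit the file by hand, the change can be scripted. Here is a minimal sketch in Python, assuming the flags are defined on lines of the form "cflags = ..." and "cxxflags = ..." in build.ninja. The helper name and the sample flags below are made up for illustration: check your own build.ninja before relying on this.

```python
def add_fpic(ninja_text):
    """Append -fPIC to the cflags and cxxflags definitions if it is missing."""
    patched = []
    for line in ninja_text.splitlines():
        name = line.split("=", 1)[0].strip()
        is_flag_line = "=" in line and name in ("cflags", "cxxflags")
        if is_flag_line and "-fPIC" not in line.split():
            line = line.rstrip() + " -fPIC"
        patched.append(line)
    return "\n".join(patched) + "\n"

# Hypothetical excerpt of a build.ninja file (the real flags will differ).
sample = "cflags = -std=gnu99 -g\ncxxflags = -std=gnu++11 -g\nldflags = -pthread\n"
patched = add_fpic(sample)
print(patched)
```

To apply it, read build.ninja into a string, pass it through the function and write the result back. Running it twice is harmless, since lines that already contain the flag are left untouched.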

Now, let’s build NNPACK and run some basic tests.

We’re done with NNPACK: you should see the library in ~/NNPACK/lib.

Building cpuinfo

NNPACK relies on this library to accurately detect CPU information. Build instructions are very similar.

Then, we need to edit build.ninja and update cflags and cxxflags again.

Now, let’s build cpuinfo and run some basic tests.

We’re also done with cpuinfo: you should see the library in ~/cpuinfo/lib.

Building Apache MXNet with NNPACK and cpuinfo

First, let’s install dependencies as well as the latest MXNet sources (1.1 at the time of writing). Detailed build instructions are available on the MXNet website.

Now, we need to configure the MXNet build. You should edit the make/config.mk file and set the variables below in order to include NNPACK in the build, as well as the dependencies we installed earlier.
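As an illustration, here is a small Python helper that sets a variable in a Makefile-style configuration file. I’m assuming here that NNPACK support is switched on with the USE_NNPACK variable in config.mk: please check the file shipped with your MXNet version. The helper name and the sample content are hypothetical, and the library and include paths you also need are not reproduced in this sketch.

```python
import re

def set_make_var(config_text, name, value):
    """Set `name = value`, replacing an existing assignment or appending a new one."""
    pattern = re.compile(r"^[ \t]*" + re.escape(name) + r"[ \t]*=.*$", re.MULTILINE)
    new_line = "%s = %s" % (name, value)
    if pattern.search(config_text):
        # A function is used as the replacement so that the value is inserted literally.
        return pattern.sub(lambda match: new_line, config_text, count=1)
    return config_text.rstrip("\n") + "\n" + new_line + "\n"

# Hypothetical excerpt of make/config.mk.
sample = "USE_OPENCV = 1\nUSE_NNPACK = 0\n"
updated = set_make_var(sample, "USE_NNPACK", "1")
print(updated)
```

Any other variable can be set the same way, one call per variable.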

Now, we’re ready to build MXNet. Our instance has 36 vCPUs, so let’s put them to good use.

A few minutes later, the build is complete. Let’s install our new MXNet library and its Python bindings.

We can quickly check that we have the proper version by importing MXNet in Python.
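A minimal way to do this is shown below. The helper name is mine, and it returns None instead of failing when MXNet cannot be imported, so that the snippet runs anywhere.

```python
import importlib

def mxnet_version():
    """Return the installed MXNet version string, or None if MXNet cannot be imported."""
    try:
        mx = importlib.import_module("mxnet")
    except ImportError:
        return None
    return getattr(mx, "__version__", None)

version = mxnet_version()
print("MXNet version:", version if version else "not installed")
```

If the version printed is not the one you just built, Python is probably picking up another MXNet installation.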

We’re all set. Time to run some benchmarks.

Benchmarks

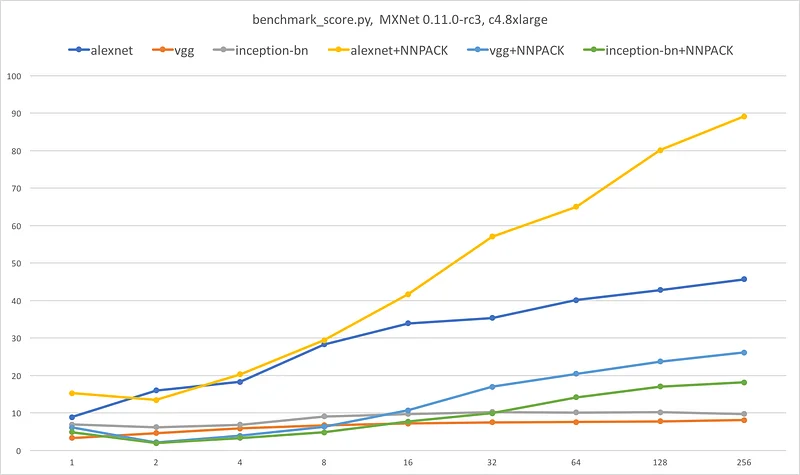

Benchmarking with a couple of images isn’t going to give us a reliable view on whether NNPACK makes a difference. Fortunately, the MXNet sources include a benchmarking script which feeds randomly generated images in a variety of batch sizes through the following models: Alexnet, VGG16, Inception-BN, Inception v3, Resnet-50 and Resnet-152.
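The principle behind such a script is simple enough to sketch in a few lines of Python. This is not the MXNet script itself: the prediction function is a dummy stand-in for a network forward pass, and the image shape, batch sizes and function names are assumptions picked to keep the example fast.

```python
import random
import time

def images_per_second(predict, batch_size, num_batches=10, image_shape=(3, 8, 8)):
    """Time `predict` on a randomly generated batch and return images per second."""
    channels, height, width = image_shape
    size = channels * height * width
    # One random "image" is a flat list of floats; a batch is a list of images.
    batch = [[random.random() for _ in range(size)] for _ in range(batch_size)]
    predict(batch)                            # warm-up call, excluded from timing
    start = time.perf_counter()
    for _ in range(num_batches):
        predict(batch)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return batch_size * num_batches / elapsed

def dummy_predict(batch):
    """Hypothetical stand-in for a network forward pass."""
    return [sum(image) for image in batch]

for bs in (1, 2, 4, 8, 16, 32):
    print("batch size %d: %.1f images/sec" % (bs, images_per_second(dummy_predict, bs)))
```

Reporting images per second for each batch size is what makes results comparable across models and across builds.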

April 14th, 2018: these numbers have not been updated. They’re still the old MXNet 0.11 numbers.

As nothing is ever simple, we need to fix a line of code in the script. C4 instances don’t have any GPU installed (which is the whole point here) and the script is unable to properly detect that fact. Here’s the modification you need to apply to ~/incubator-mxnet/example/image-classification/benchmark_score.py. While we’re at it, let’s add additional batch sizes.

Time to run some benchmarks. Let’s give 8 threads to NNPACK, which is the largest recommended value.
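As far as I know, the thread count is controlled by the MXNET_CPU_NNPACK_NTHREADS environment variable: treat that name as an assumption and check the MXNet documentation for your version. Here is a minimal Python sketch that prepares an environment with this variable set. The helper name is hypothetical.

```python
import os

def nnpack_env(num_threads, base_env=None):
    """Return a copy of the environment with the NNPACK thread count set."""
    env = dict(os.environ if base_env is None else base_env)
    env["MXNET_CPU_NNPACK_NTHREADS"] = str(num_threads)
    return env

env = nnpack_env(8)
print(env["MXNET_CPU_NNPACK_NTHREADS"])
# The environment could then be passed to a child process, for example:
# subprocess.run([sys.executable, "benchmark_score.py"], env=env, check=True)
```

Working on a copy keeps the setting local to the benchmark run instead of changing the environment of the current process.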

Full results are available here. As a reference, I also ran the same script on an identical instance running the vanilla 0.11.0-rc3 MXNet (full results are available here).

As we can see in the graph above, NNPACK delivers a significant speedup for Alexnet (up to 2x), VGG (up to 3x) and Inception-BN (almost 2x).

For reasons beyond the scope of this article, NNPACK doesn’t (yet?) deliver any speedup for Inception v3 and Resnet.

Conclusion

When GPU inference is not available, adding NNPACK to Apache MXNet may be an easy option to extract more performance from your network.

As always, your mileage may vary and you should always run your own tests.

It would definitely be interesting to run the same benchmark on the upcoming c5 instances (based on the latest Intel Skylake architecture) and on a Raspberry Pi. More articles to be written :)

Thanks for reading!