Training with PyTorch on Amazon SageMaker

PyTorch is a flexible open source framework for Deep Learning experimentation. In this post, you will learn how to run PyTorch training jobs on Amazon SageMaker. I’ll show you how to:

- build a custom Docker container for CPU and GPU training,

- pass parameters to a PyTorch script,

- save the trained model.

As usual, you’ll find my code on GitHub :)

Building a custom container

SageMaker provides a collection of built-in algorithms as well as environments for TensorFlow and MXNet… but not for PyTorch. Fortunately, developers have the option to build custom containers for training and prediction.

Of course, SageMaker relies on a few conventions to invoke a custom container successfully:

- Name of the training and prediction scripts: by default, they must be named ‘train’ and ‘serve’ respectively, be executable, and have no extension. SageMaker starts training by running ‘docker run your_container train’.

- Location of hyperparameters in the container: /opt/ml/input/config/hyperparameters.json.

- Location of input data in the container: the files of each channel are copied to /opt/ml/input/data/<channel_name>, and the input data configuration is written to /opt/ml/input/config/inputdataconfig.json.
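To make these conventions concrete, here’s a small sketch of the paths a training script can derive from SageMaker’s /opt/ml layout. The constant and function names are hypothetical; only the directory layout comes from SageMaker.

```python
import posixpath  # container paths are always POSIX-style

# Root of everything SageMaker mounts into the training container.
SM_PREFIX = "/opt/ml"

# Files and directories defined by the SageMaker container conventions.
HYPERPARAMETERS_FILE = posixpath.join(SM_PREFIX, "input", "config", "hyperparameters.json")
INPUT_DATA_CONFIG_FILE = posixpath.join(SM_PREFIX, "input", "config", "inputdataconfig.json")
DATA_DIR = posixpath.join(SM_PREFIX, "input", "data")
MODEL_DIR = posixpath.join(SM_PREFIX, "model")


def channel_dir(channel_name):
    """Directory where SageMaker copies the files of one input channel (file mode)."""
    return posixpath.join(DATA_DIR, channel_name)
```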

This requires a few changes to our PyTorch script, the well-known example of training a simple CNN on MNIST. As you will see in a moment, they are minor, and you won’t have any trouble adding them to your own code.

Building a Docker container

Here’s the Dockerfile.

We start from the CUDA 9.0 image, which is based on Ubuntu 16.04 and includes all the CUDA libraries that PyTorch needs. We then add Python 3 and the PyTorch packages.

Unlike MXNet, PyTorch comes in a single package that supports both CPU and GPU training.

Once this is done, we clean up various caches to shrink the container size a bit. Then, we copy the PyTorch script to /opt/program with the proper name (‘train’) and we make it executable.

For more flexibility, we could write a generic launcher that fetches the actual training script from an S3 location passed as a hyperparameter. This is left as an exercise for the reader ;)

Finally, we set the script’s directory as the working directory and add it to the PATH.
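Since SageMaker executes the ‘train’ script directly, the script needs a shebang line and should signal failure through its exit code. Here’s a minimal sketch of such a skeleton, assuming a Python 3 interpreter on the PATH; `main` and `run` are hypothetical names, not the code from the repo. On failure, it also writes the traceback to /opt/ml/output/failure, the file SageMaker reads to report why a training job failed.

```python
#!/usr/bin/env python3
# Skeleton of an extension-less, executable "train" script (hypothetical structure).
import os
import sys
import traceback

# SageMaker reads this file to populate the failure reason of a training job.
FAILURE_FILE = "/opt/ml/output/failure"


def main():
    """Placeholder for the actual PyTorch training logic."""
    print("Training...")


def run(train_fn, failure_file=FAILURE_FILE):
    """Run train_fn and return an exit code, recording any failure for SageMaker."""
    try:
        train_fn()
        return 0
    except Exception:
        trace = traceback.format_exc()
        os.makedirs(os.path.dirname(failure_file), exist_ok=True)
        with open(failure_file, "w") as f:
            f.write(trace)
        print(trace, file=sys.stderr)
        # A non-zero exit code tells SageMaker that the job failed.
        return 1


if __name__ == "__main__":
    exit_code = run(main)
    if exit_code != 0:
        sys.exit(exit_code)
```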

It’s not a long file, but as usual with these things, every detail counts.

Creating a Docker repository in Amazon ECR

SageMaker requires the containers it runs to be hosted in Amazon ECR. Let’s create a repo and log in to it.
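Here’s a minimal sketch of the login step in Python. `decode_ecr_login` and `docker_login_command` are hypothetical helpers; they rely on the fact that the ECR GetAuthorizationToken call returns a base64-encoded `AWS:<password>` token along with the registry’s `proxyEndpoint`.

```python
import base64


def decode_ecr_login(auth_response):
    """Turn an ECR GetAuthorizationToken response into docker login credentials."""
    data = auth_response["authorizationData"][0]
    # The token is base64("AWS:<password>").
    token = base64.b64decode(data["authorizationToken"]).decode("utf-8")
    username, password = token.split(":", 1)
    # proxyEndpoint looks like "https://<account>.dkr.ecr.<region>.amazonaws.com".
    registry = data["proxyEndpoint"].replace("https://", "", 1)
    return username, password, registry


def docker_login_command(username, registry):
    """Build a docker login command that reads the password from stdin."""
    return ["docker", "login", "--username", username, "--password-stdin", registry]
```

With boto3, you would create the repo with `ecr = boto3.client("ecr")` and `ecr.create_repository(repositoryName="pytorch")` (the name is a placeholder), pass `ecr.get_authorization_token()` to `decode_ecr_login`, then run the command with `subprocess.run(cmd, input=password.encode(), check=True)`.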

Building and pushing our containers to ECR

OK, now it’s time to build both containers and push them to their repos. We’ll do this separately for the CPU and GPU versions. Strictly Docker stuff. Please refer to the notebook for details on variables.
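If you’d like to script these steps, here’s a sketch that only builds the ECR image URI and the docker command lines for each variant. The Dockerfile names (Dockerfile.cpu, Dockerfile.gpu), repository names and account ID are hypothetical placeholders, not the notebook’s variables.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Full name of an image stored in Amazon ECR (standard AWS partition)."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def build_and_push_commands(dockerfile, local_name, image_uri):
    """Docker commands that build an image, tag it for ECR and push it."""
    return [
        ["docker", "build", "-t", local_name, "-f", dockerfile, "."],
        ["docker", "tag", local_name, image_uri],
        ["docker", "push", image_uri],
    ]
```

Each command can then be run with `subprocess.run(cmd, check=True)`, once for the CPU image and once for the GPU image.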

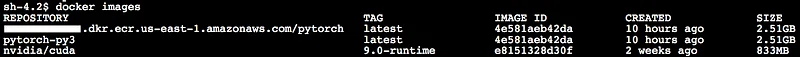

Once we’re done, things should look like this, and you should also see your containers in ECR.

The Docker part is over. Now let’s configure our training job in SageMaker.

Configuring the training job

This is actually quite underwhelming, which is great news: nothing really differs from training with a built-in algorithm!

First we need to upload the MNIST data set from our local machine to S3. We’ve done this many times before, nothing new here.
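As a sketch of this step, here’s a stdlib helper that maps a local directory to S3 keys and uploads each file through a boto3 S3 client passed in by the caller (its `upload_file(Filename, Bucket, Key)` method). The local layout (one sub-directory per channel), bucket and prefix are hypothetical.

```python
import os


def s3_upload_plan(local_dir, prefix):
    """Map every file under local_dir to an S3 key under prefix, keeping sub-directories."""
    plan = []
    for root, _dirs, files in os.walk(local_dir):
        for name in files:
            path = os.path.join(root, name)
            rel = os.path.relpath(path, local_dir).replace(os.sep, "/")
            plan.append((path, f"{prefix.rstrip('/')}/{rel}"))
    # Sort by key so the upload order is deterministic.
    return sorted(plan, key=lambda item: item[1])


def upload_dir(s3_client, local_dir, bucket, prefix):
    """Upload a local directory with a boto3 S3 client; returns the uploaded keys."""
    keys = []
    for path, key in s3_upload_plan(local_dir, prefix):
        s3_client.upload_file(path, bucket, key)
        keys.append(key)
    return keys
```

With boto3, `upload_dir(boto3.client("s3"), "data", "my-bucket", "pytorch/input")` would upload data/train/... and data/validation/... under the same prefix; all names are placeholders.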

Then, we configure the training job by:

- selecting one of the containers we just built and setting the usual parameters for SageMaker estimators,

- passing hyperparameters to the PyTorch script,

- passing input data to the PyTorch script.
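The notebook uses a SageMaker SDK estimator for this. To show what that configuration boils down to, here’s a sketch that builds the equivalent request for the low-level CreateTrainingJob API. The hyperparameter names, S3 URIs, role ARN, volume size and maximum runtime are hypothetical; the instance type matches the p3.2xlarge used later in this post.

```python
def training_job_request(job_name, image_uri, role_arn, train_s3, validation_s3,
                         output_s3, hyperparameters, instance_type="ml.p3.2xlarge"):
    """Build keyword arguments for boto3's sagemaker create_training_job()."""

    def channel(name, uri):
        # One input channel, copied to /opt/ml/input/data/<name> in file mode.
        return {
            "ChannelName": name,
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }

    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        # SageMaker expects every hyperparameter value as a string.
        "HyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "InputDataConfig": [channel("train", train_s3), channel("validation", validation_s3)],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": 1,
                           "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }
```

The resulting dictionary can be passed to `boto3.client("sagemaker").create_training_job(**request)`.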

Unlike Keras, PyTorch has APIs to check whether CUDA is available and how many GPUs are present. Thus, we don’t need to pass this information as a hyperparameter. Multi-GPU training is also possible but requires extra work: MXNet makes it much simpler.
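Here’s a minimal sketch of that device detection with `torch.cuda.is_available()` and `torch.cuda.device_count()`; `pick_device` is a hypothetical helper name.

```python
import torch


def pick_device():
    """Return the device to train on and the number of visible GPUs."""
    if torch.cuda.is_available():
        return torch.device("cuda"), torch.cuda.device_count()
    return torch.device("cpu"), 0
```

The model and each batch are then moved with `.to(device)`, so the same script runs unchanged in the CPU and GPU containers. For multi-GPU training, wrapping the model in `torch.nn.DataParallel` is one option, but that’s beyond this example.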

That’s it for training. The last part we’re missing is adapting our PyTorch script for SageMaker. Let’s get to it.

Adapting the PyTorch script for SageMaker

We need to take care of hyperparameters, input data, multi-GPU configuration, loading the data set, and saving the model.

Passing hyperparameters and input data configuration

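As mentioned earlier, SageMaker copies hyperparameters to /opt/ml/input/config/hyperparameters.json. All we have to do is read this file, extract parameters, and set default values if needed. Keep in mind that SageMaker stores every value as a string, so numeric parameters must be converted.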
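Here’s a minimal sketch of that logic. The parameter names and default values are hypothetical; each default also fixes the type its value is cast to.

```python
import json
import os

HYPERPARAMETERS_FILE = "/opt/ml/input/config/hyperparameters.json"

# Hypothetical defaults; the type of each default is used to cast the value.
DEFAULTS = {"epochs": 10, "batch-size": 64, "lr": 0.01}


def load_hyperparameters(path=HYPERPARAMETERS_FILE, defaults=DEFAULTS):
    """Read SageMaker hyperparameters, falling back to defaults and casting types."""
    params = {}
    if os.path.exists(path):
        with open(path) as f:
            params = json.load(f)
    result = {}
    for name, default in defaults.items():
        value = params.get(name, default)
        # SageMaker writes every value as a string, so cast to the default's type.
        result[name] = type(default)(value)
    return result
```

Casting with `type(default)` works for ints, floats and strings; a boolean flag would need explicit parsing, since `bool("False")` is `True`.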

In a similar fashion, SageMaker copies the input data configuration to /opt/ml/input/config/inputdataconfig.json. We’ll handle it in exactly the same way.

In this example, I don’t need this configuration info, but this is how you’d read it if you did :)
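For reference, here’s a sketch of reading it. The file maps each channel name to its settings (such as `TrainingInputMode`); the helper names are hypothetical.

```python
import json

INPUT_DATA_CONFIG_FILE = "/opt/ml/input/config/inputdataconfig.json"


def load_input_data_config(path=INPUT_DATA_CONFIG_FILE):
    """Return {channel_name: settings} as written by SageMaker."""
    with open(path) as f:
        return json.load(f)


def channel_directories(config, data_dir="/opt/ml/input/data"):
    """Map each declared channel to the directory holding its files in file mode."""
    return {name: f"{data_dir}/{name}" for name in config}
```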

Loading the training and validation sets

When training in file mode (which is the case here), SageMaker automatically copies each channel of the data set to /opt/ml/input/data/<channel_name>. Here, we defined the train and validation channels, so we need to:

- read the MNIST files from the corresponding directories,

- build Dataset objects for the training and validation sets,

- load them with DataLoader objects.
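Here’s a minimal stdlib sketch of the first step, assuming the channels contain the standard MNIST IDX files (for example train-images-idx3-ubyte.gz, optionally gzipped). `read_idx` is a hypothetical helper, not the code from the repo.

```python
import gzip
import math
import struct


def read_idx(path):
    """Read an (optionally gzipped) IDX file of unsigned bytes, such as the MNIST files.

    Returns (shape, data) where data is a flat bytes object.
    """
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as f:
        raw = f.read()
    # Magic number: two zero bytes, a type code (0x08 = unsigned byte), the number of dims.
    zero1, zero2, dtype, ndim = struct.unpack(">BBBB", raw[:4])
    if zero1 != 0 or zero2 != 0 or dtype != 0x08:
        raise ValueError("only unsigned-byte IDX files are supported")
    # Each dimension is a big-endian 32-bit unsigned integer.
    shape = struct.unpack(f">{ndim}I", raw[4:4 + 4 * ndim])
    data = raw[4 + 4 * ndim:]
    if len(data) != math.prod(shape):
        raise ValueError(f"expected {math.prod(shape)} bytes, got {len(data)}")
    return shape, data
```

From there, something like `torch.tensor(list(data), dtype=torch.uint8).reshape(shape)` turns images and labels into tensors, which `torch.utils.data.TensorDataset` and `torch.utils.data.DataLoader` can then wrap.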

Saving the model

The very last thing we need to do once training is complete is to save the model in /opt/ml/model: SageMaker will grab all artefacts present in this directory, build a file called model.tar.gz and copy it to the S3 bucket used by the training job.
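A minimal sketch of that last step, assuming the script saves the model’s `state_dict()` with `torch.save()` (the repo may save the whole model instead). `save_model` is a hypothetical helper, and the file name pattern mirrors the mnist-cnn-10.pt artifact listed later in this post.

```python
import os

import torch

MODEL_DIR = "/opt/ml/model"


def save_model(model, epochs, model_dir=MODEL_DIR):
    """Save the model's weights where SageMaker will pick them up."""
    os.makedirs(model_dir, exist_ok=True)
    path = os.path.join(model_dir, f"mnist-cnn-{epochs}.pt")
    # Everything in model_dir ends up in model.tar.gz once the job completes.
    torch.save(model.state_dict(), path)
    return path
```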

That’s it. As you can see, it’s all about interfacing your script with SageMaker input and output. The bulk of your PyTorch code doesn’t require any modification.

Running the script

Alright, let’s run this on a p3.2xlarge instance.

Let’s check the S3 bucket.

$ aws s3 ls $BUCKET/pytorch/output/pytorch-mnist-cnn-2018-06-02-08-16-11-355/output/

2018-06-02 08:20:28 86507 model.tar.gz

$ aws s3 cp $BUCKET/pytorch/output/pytorch-mnist-cnn-2018-06-02-08-16-11-355/output/model.tar.gz .

$ tar tvfz model.tar.gz

-rw-r--r-- 0/0 99436 2018-06-02 08:20 mnist-cnn-10.pt

Pretty cool, right? We can now use this model anywhere we like.
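If you’d rather inspect the artifact from Python than with tar, here’s a small stdlib sketch equivalent to the `tar tvfz` listing above; `list_model_artifacts` is a hypothetical helper.

```python
import tarfile


def list_model_artifacts(archive_path):
    """Return (name, size) for every regular file in a SageMaker model.tar.gz."""
    with tarfile.open(archive_path, "r:gz") as tar:
        return [(m.name, m.size) for m in tar.getmembers() if m.isfile()]
```

To use the model, extract the archive (for example with `TarFile.extractall()`) and load the .pt file with `torch.load()`.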

That’s it for today. Another (hopefully) nice example of using SageMaker to train your custom jobs on fully-managed infrastructure!

Happy to answer questions here or on Twitter. For more content, please feel free to check out my YouTube channel.
