Johnny Pi, I am your father — part 8: reading, translating and more!

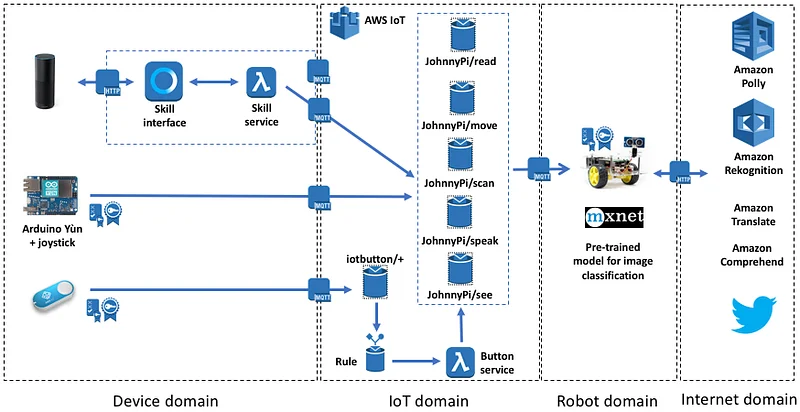

It’s been a while since part 7, where we added a custom Alexa skill to interact with our robot. Plenty has happened since then, so it’s time to teach Johnny some new things, namely:

- Speeding up local prediction,

- Recognising celebrities,

- Reading text,

- Detecting the language of a text,

- Translating text.

As usual, all code is available on GitLab and you’ll see a video demo at the end of the post. Let’s get to work.

Speeding up local prediction

In part 5, we implemented local object classification thanks to Apache MXNet and a pre-trained model: it worked fine, albeit a little slowly because of the modest CPU of the Raspberry Pi.

To speed things up, I upgraded to MXNet 1.1 and built it with NNPACK, an open source acceleration library (which we already discussed).

Building MXNet 1.x on a Pi takes well over an hour, but you can get it done. Unfortunately, there is not enough memory to run parallel make (‘make -j’), so stick to ‘make’… and get some more tea, coffee or beer!

Thanks to this, Johnny can now predict a single image with the Inception v3 model in about one second, which is 3 to 4 times faster than before. This feels pretty much instantaneous when asking him to classify an object.

Recognising celebrities

We already implemented face detection (part 4), so let’s now handle celebrities. This feature was added to Amazon Rekognition a while ago — and used by Sky News at the recent royal wedding :)

Let’s just use the RecognizeCelebrities API and update the function that builds the text message spoken by Johnny.

No changes to the Alexa skill: it will still ask Johnny to look for faces by posting a message to the JohnnyPi/see topic. If Johnny detects celebrities, then they will be mentioned in the voice message and in the tweet.
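To make this step concrete, here is a minimal sketch rather than the actual code from the repository. It assumes the caller passes in a boto3 Rekognition client and the raw bytes of the picture. The function names, the 80% confidence threshold and the wording of the message are hypothetical.

```python
def celebrity_names(response, min_confidence=80.0):
    """Extract celebrity names from a RecognizeCelebrities response."""
    names = []
    for face in response.get("CelebrityFaces", []):
        # MatchConfidence is a percentage between 0 and 100.
        if face.get("MatchConfidence", 0.0) >= min_confidence:
            names.append(face["Name"])
    return names


def celebrity_message(names):
    """Build the sentence to speak (hypothetical wording)."""
    if not names:
        return ""
    if len(names) == 1:
        return "I have recognised " + names[0] + "."
    return "I have recognised " + ", ".join(names[:-1]) + " and " + names[-1] + "."


def recognize_celebrities(client, image_bytes):
    """Call Rekognition and return the message for the picture."""
    response = client.recognize_celebrities(Image={"Bytes": image_bytes})
    return celebrity_message(celebrity_names(response))
```

The client would typically be created with boto3.client("rekognition"). An empty message means no celebrity was found, so the existing face description can be spoken unchanged.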

Quick test? Sure :)

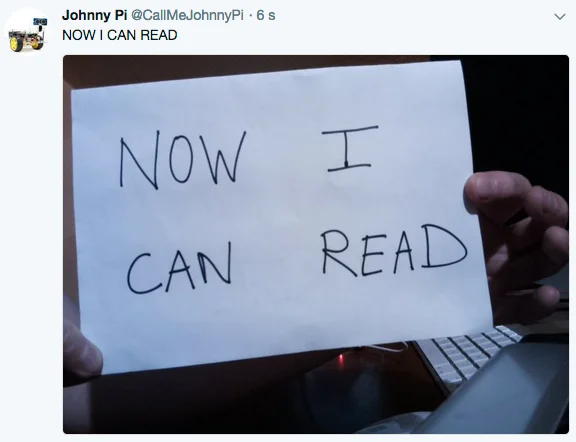

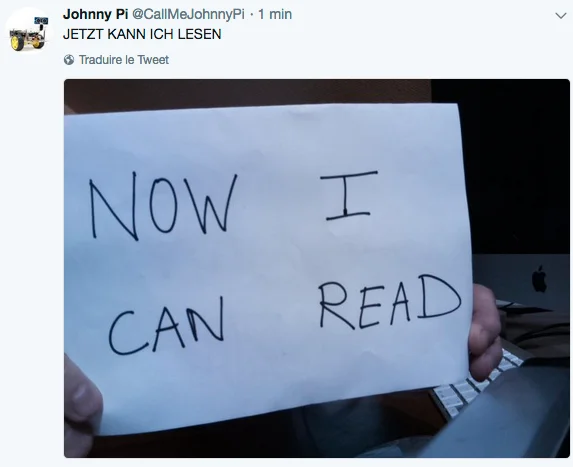

Reading text

This is another feature in Amazon Rekognition. All we need to do is call the DetectText API and extract all lines of text. We’ll also use a new topic (JohnnyPi/read) to receive messages from the skill.

Skill-side, we need a new intent (ReadIntent, no slot needed) and an appropriate handler in the Lambda function.
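On the robot side, extracting the lines could look like the sketch below. It is an illustration under assumptions, not the repository code: the function names are hypothetical and the client is expected to be a boto3 Rekognition client. DetectText returns both LINE and WORD detections, so only the LINE entries are kept to avoid reading every word twice.

```python
def extract_lines(response):
    """Keep only the LINE detections from a DetectText response."""
    return [
        detection["DetectedText"]
        for detection in response.get("TextDetections", [])
        if detection.get("Type") == "LINE"
    ]


def read_text(client, image_bytes):
    """Call Rekognition and return all detected lines as one string."""
    response = client.detect_text(Image={"Bytes": image_bytes})
    return " ".join(extract_lines(response))
```

The resulting string can then be handed to the text-to-speech step, exactly like any other message spoken by Johnny.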

Let’s try it.

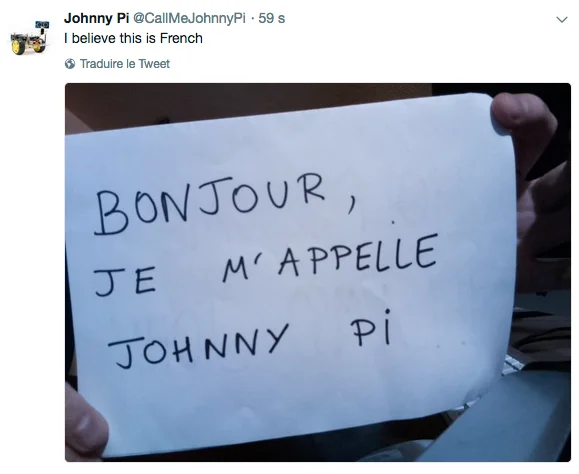

Detecting the language of a text

Amazon Comprehend is a Natural Language Processing service launched at re:Invent 2017: one of its features is the ability to detect the dominant language of a text, with support for 100 different languages.

Here, we’ll simply use the DetectDominantLanguage API as well as the JohnnyPi/read topic again (with a ‘language’ message).

Skill-side, we need to create another new intent (LanguageIntent, no slot needed) and implement the corresponding handler in the Lambda function.
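Here is a minimal sketch of the detection step, assuming a boto3 Comprehend client is passed in. The function names, the spoken sentences and the small table of language names are hypothetical and only cover the languages used later in this post.

```python
# Hypothetical lookup table: language code -> name to speak.
LANGUAGE_NAMES = {
    "en": "English",
    "fr": "French",
    "es": "Spanish",
    "pt": "Portuguese",
    "de": "German",
}


def dominant_language(response):
    """Return the highest-scoring language code, or None if there is none."""
    languages = response.get("Languages", [])
    if not languages:
        return None
    return max(languages, key=lambda lang: lang["Score"])["LanguageCode"]


def describe_language(client, text):
    """Call Comprehend and build the sentence to speak."""
    response = client.detect_dominant_language(Text=text)
    code = dominant_language(response)
    if code is None:
        return "I could not detect the language."
    return "I think this is " + LANGUAGE_NAMES.get(code, "language code " + code) + "."
```

The text passed in would be the lines returned by DetectText for the current picture.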

Let’s try it.

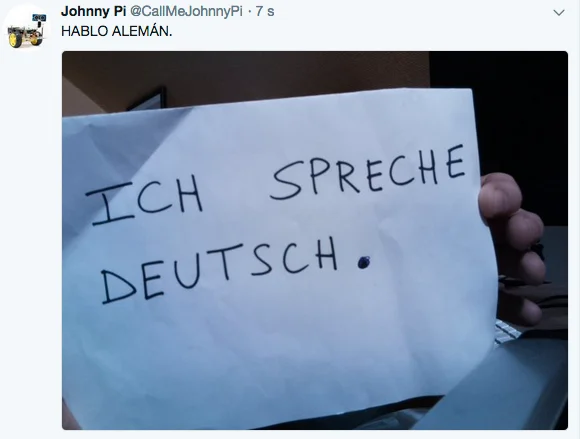

Translating text

Amazon Translate is another service launched at re:Invent 2017. At the time of writing, it can translate from English to French, Spanish, Portuguese, German, Chinese (simplified) and Arabic, and vice-versa. More languages are coming soon :)

We’ll use the TranslateText API and the JohnnyPi/read topic again (with a ‘translate DESTINATION LANGUAGE’ message). We’ll support translation between any two of these languages: English, French, Spanish, Portuguese and German. Since Translate only goes to and from English for now, we’ll use English as a pivot language when neither the source nor the target is English.

Polly doesn’t yet support Arabic or Chinese, which is why I’ve left them out.

Translate supports source language detection (you just use ‘auto’ as the source language), but we can’t use it here: we need to know what the source language is (Comprehend will tell us) in order to decide whether we need to pivot.
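The pivot logic can be sketched as follows. This is an illustration under assumptions, not the repository code: the function names are hypothetical, the client is expected to be a boto3 Translate client, and the source language code is assumed to come from Comprehend.

```python
PIVOT = "en"  # Every supported pair goes to or from English.


def translation_steps(source, target):
    """Return the list of (source, target) hops needed for a translation."""
    if source == target:
        return []  # Nothing to translate.
    if source == PIVOT or target == PIVOT:
        return [(source, target)]  # Direct translation.
    return [(source, PIVOT), (PIVOT, target)]  # Go through English.


def translate(client, text, source, target):
    """Translate text, pivoting through English when needed."""
    for src, dst in translation_steps(source, target):
        response = client.translate_text(
            Text=text,
            SourceLanguageCode=src,
            TargetLanguageCode=dst,
        )
        text = response["TranslatedText"]
    return text
```

With this design, German to Spanish costs two API calls (German to English, then English to Spanish), while English to German costs a single one.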

Skill-side, there’s a little more work this time:

- We need a slot for the target language. There is a convenient pre-defined slot type named AMAZON.Language, which is exactly what we need!

- We need to validate the slot against the list of supported languages.
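The validation step above can be sketched as below, assuming the Lambda function is written in Python and receives the standard Alexa request JSON. The slot name "Language", the function name and the lookup table are hypothetical.

```python
# Hypothetical table: spoken language name -> language code.
SUPPORTED_LANGUAGES = {
    "english": "en",
    "french": "fr",
    "spanish": "es",
    "portuguese": "pt",
    "german": "de",
}


def target_language_code(intent, slot_name="Language"):
    """Return the code for the requested language, or None if unsupported."""
    slot = intent.get("slots", {}).get(slot_name, {})
    value = slot.get("value")
    if not value:
        return None  # The user did not name a language.
    return SUPPORTED_LANGUAGES.get(value.strip().lower())
```

In the handler, the intent would be event["request"]["intent"]. A None result lets the skill answer with an error prompt instead of posting a message to the JohnnyPi/read topic.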

Let’s try English to German.

Now what about German to Spanish?

Live testing

OK, now you really want to see this live, don’t you? Of course :)

If you’d like to know more about all these services, please take a look at this recent AWS Summit talk.

Happy to answer questions here or on Twitter. For more content, please feel free to check out my YouTube channel.

Part 0: a sneak preview

Part 1: moving around

Part 2: the joystick

Part 3: cloud-based speech

Part 4: cloud-based vision

Part 5: local vision

Part 6: the IoT button

Part 7: the Alexa skill

Be good, Johnny. I’m going to need you for a few demos :)